Invisible Changes Coming to Sputter

I have a confession to make: the audio and sequencer logic code in Sputter is really unoptimized. Judged by the holy grail of audio programming, and by a lot of other general optimization advice, pretty much everything is done wrong. As a result, Sputter uses more CPU power and battery than it really needs to. Audio latency is also unreasonably long, which is evident when notes are played back as they are inserted in the grid.

But Sputter works fine for me!

In practice Sputter works pretty well on most recent hardware, and latency is not much of a pain point since it is a sequencer and not a live instrument. However, there are some limitations that will probably become obvious in upcoming versions:

- Sputter does not work so well on low-end hardware. This worries me with regard to releasing a free version, since free apps typically get more downloads on cheap devices.

- Drum pads and piano roll, which are planned features, will benefit greatly from low latency.

- Perhaps Sputter could run on cheap embedded hardware like the Raspberry Pi Zero 2 W?

Another thing to consider is stability. After I tackled a couple of embarrassing faults, Sputter is now pretty stable and crash reports are few and far between. As soon as I touch any of the sequencer logic code, though, bugs might recur.

A good way to achieve a robust code base that is easy to modify is to write it using test-driven development. That way every piece of functionality in the sequencer logic code will have corresponding automated tests, so if a change in the code breaks something else, it will be discovered immediately.

Test-driven development works best when it is practiced from the start, and it is hard to retrofit after the fact. This is therefore a good reason to rewrite the sequencer logic code.
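To illustrate what such tests might look like, here is a minimal C++ sketch. `Pattern`, `toggle`, `tick` and the test functions are hypothetical names invented for this example, not Sputter's actual code: a single row of on/off steps with a playhead that wraps around, plus two small tests that each pin down one behaviour.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, minimal step-sequencer row (not Sputter's real code):
// a fixed number of on/off steps and a playhead that wraps around.
class Pattern {
public:
    explicit Pattern(std::size_t length) : steps_(length, false) {}

    // Toggle a note on or off at the given step, e.g. after a tap in the grid.
    void toggle(std::size_t step) { steps_.at(step) = !steps_.at(step); }

    bool isOn(std::size_t step) const { return steps_.at(step); }

    // Report whether the step under the playhead should trigger a note,
    // then advance the playhead by one step, wrapping at the end.
    bool tick() {
        bool trigger = steps_[position_];
        position_ = (position_ + 1) % steps_.size();
        return trigger;
    }

    std::size_t position() const { return position_; }

private:
    std::vector<bool> steps_;
    std::size_t position_ = 0;
};

// Each test pins down one behaviour, so a later change that breaks it
// is caught as soon as the tests are run.
void testToggleTurnsStepOnAndOff() {
    Pattern p(4);
    p.toggle(2);
    assert(p.isOn(2));
    p.toggle(2);
    assert(!p.isOn(2));
}

void testPlayheadWrapsAround() {
    Pattern p(4);
    p.toggle(0);
    std::vector<bool> triggered;
    for (int i = 0; i < 5; ++i) {
        triggered.push_back(p.tick());
    }
    // Step 0 fires, steps 1-3 are silent, then the playhead wraps to step 0.
    assert((triggered == std::vector<bool>{true, false, false, false, true}));
    assert(p.position() == 1);
}
```

In test-driven development the tests would be written first and the `Pattern` code filled in until they pass; a test runner (or a framework such as GoogleTest) would then execute them after every change.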

Then what’s the plan?

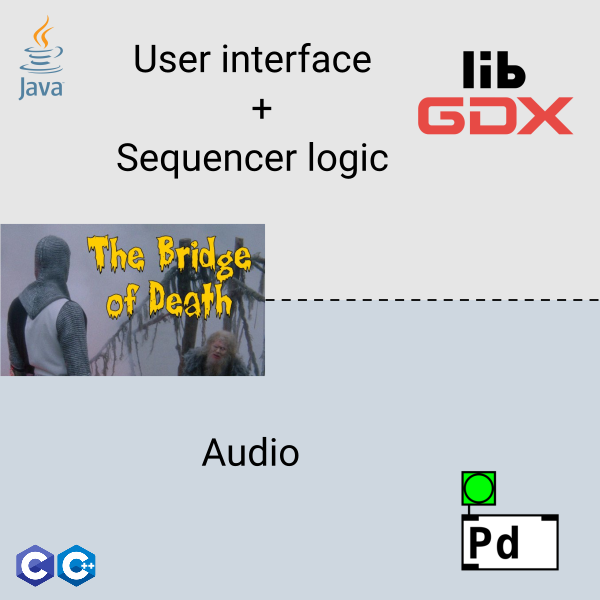

The UI of Sputter is written in Java using libGDX. The audio backend is a Pure Data patch running in libpd. The sequencer logic and its associated data structures also live in Java land, while libpd and the audio drivers are written in C and C++. This means that in order for the sequencer and UI code to communicate with the audio backend, the Java Native Interface (hereafter called The Bridge of Death) has to be crossed.

This is what the basic structure of Sputter looks like:

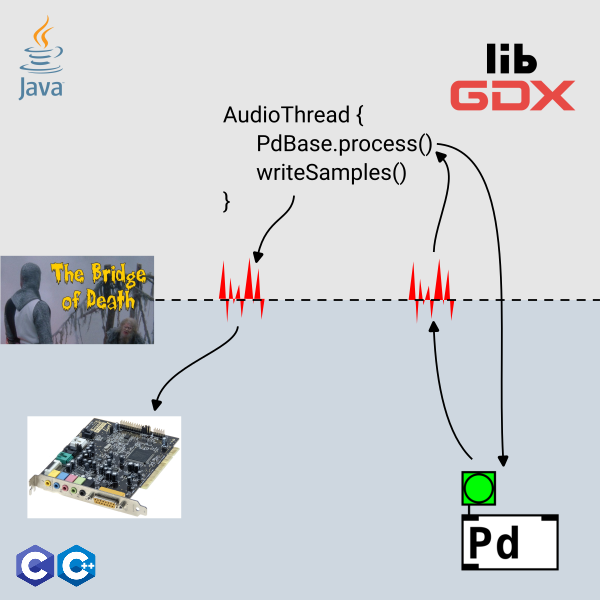

Why call it The Bridge of Death? Well, every JNI call comes with a performance cost, which is not ideal for audio applications. I was also told by a Google employee that on Android this alone adds 20 ms of latency to the audio signal.

To make matters worse, every processed chunk of audio you hear from the speakers crosses the bridge twice:

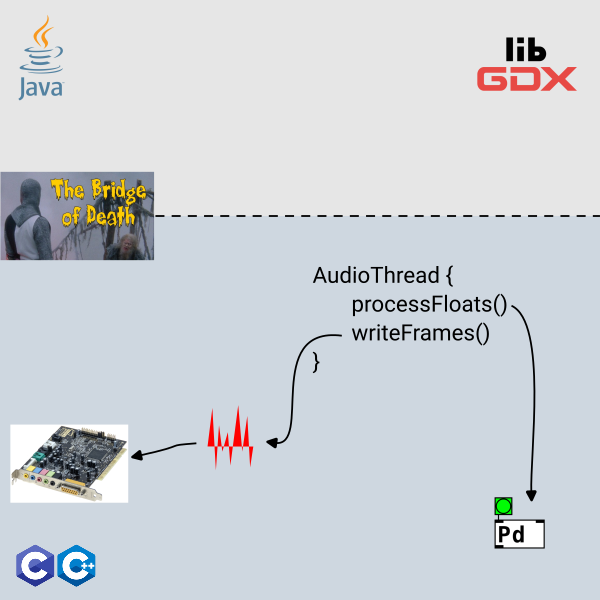

It would be better to keep everything audio related outside of Java by moving the sequencer logic into C/C++ land, alongside libpd and the audio drivers.

This way there will be no crossing of The Bridge of Death in the audio thread.

Of course there still has to be some communication between the UI and the audio backend, and this has to take place through JNI, but it will consist of short messages sent infrequently, like when a note is inserted. In addition, the UI/audio communication will happen in a separate thread, which means it will run at a lower priority than the audio thread and probably on a separate core altogether.
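One common way to pass such short messages to the audio thread without ever making it wait is a lock-free single-producer/single-consumer queue. Below is a minimal C++17 sketch under that assumption; `NoteMessage`, `SpscQueue`, `Grid` and `drainMessages` are hypothetical names, not part of Sputter or libpd, and the JNI entry point the UI would call is left out.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <optional>

// Hypothetical message sent from the UI thread, e.g. when a note is inserted.
struct NoteMessage {
    int track;
    int step;
    bool on;
};

// Minimal single-producer/single-consumer ring buffer. Only the UI thread
// calls push() and only the audio thread calls pop(), so no locks are needed.
// One slot is kept empty to tell "full" from "empty", so it holds Capacity - 1 items.
template <typename T, std::size_t Capacity>
class SpscQueue {
public:
    bool push(const T& item) {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        const std::size_t next = (head + 1) % Capacity;
        if (next == tail_.load(std::memory_order_acquire)) {
            return false;  // full: the UI can retry later instead of blocking
        }
        buffer_[head] = item;
        head_.store(next, std::memory_order_release);  // publish the item
        return true;
    }

    std::optional<T> pop() {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        if (tail == head_.load(std::memory_order_acquire)) {
            return std::nullopt;  // empty
        }
        T item = buffer_[tail];
        tail_.store((tail + 1) % Capacity, std::memory_order_release);
        return item;
    }

private:
    std::array<T, Capacity> buffer_{};
    std::atomic<std::size_t> head_{0};  // written by the producer (UI) only
    std::atomic<std::size_t> tail_{0};  // written by the consumer (audio) only
};

// Hypothetical sequencer state: 4 tracks x 16 steps (sizes chosen arbitrarily).
using Grid = std::array<std::array<bool, 16>, 4>;

// Called by the audio thread at the start of each buffer: apply every pending
// UI message to the grid before rendering. Assumes the UI sends valid indices.
template <std::size_t N>
int drainMessages(SpscQueue<NoteMessage, N>& queue, Grid& grid) {
    int applied = 0;
    while (auto msg = queue.pop()) {
        grid[msg->track][msg->step] = msg->on;
        ++applied;
    }
    return applied;
}
```

The point of this design is that the audio thread never waits on a lock held by the UI thread: if the queue happens to be full, `push()` simply returns `false` and the UI side can try again on its own, lower-priority thread.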

When will this be done?

The biggest efforts for me will be learning C++ properly and figuring out the best way to communicate between the UI, the sequencer code and libpd. The sequencer code itself is actually not that substantial, so once all the underlying components are set up it should be relatively easy to rewrite.

Despite being a fairly big undertaking, it will probably end up in a mere x.x.1 release: if the current version is 1.5.0, the one containing these changes will be 1.5.1, making it one of those “bug fixes and optimizations” releases.

It is not easy to estimate a time frame for this, but keep an eye on this blog and/or the Reddit sub, and I will post news as it happens!